We have an exciting image from the Hamamatsu company. They create high-end instruments which capture full-field microscopic images. We were able to upload a sample image provided by them.

See the image on Gigapan.Org

Our philosophy has always been that we have tools for capturing images, tools for stitching images, and a website for sharing and annotating those images. We don't care if you use 'our' tools - our excitement is to provide tools for capturing, processing, and sharing gigapixel imagery!

We don't have a sense of scale for the very small, and gigapan + microscopes (optical or SEM) can go a huge way towards opening the country of the very small to a wider public. Richard Feynman wrote "There's Plenty of Room at the Bottom", but even if you have read that (more than once :-), you still don't get the sense of what 'small' means that you can get from seeing it in a Gigapan.

Tuesday, June 29, 2010

Thursday, June 17, 2010

Stitching Troubles

Monday, June 14, 2010

Ant and a Fly

The image you see here is of an ant holding a fly, taken using an optical microscope. The image is focus stacked and is a 4x6 mosaic stitched together. The image is magnified ~40x and is particularly interesting because it is a micro-gigapan of a specimen that was imaged last summer using a scanning electron microscope. It is neat to compare the two imaging techniques and notice the differences in what you can and cannot see through each microscope.

View the full image at GigaPan.org

This SEM image is composed of 288 images magnified around 400x.

View the full image at GigaPan.org


Thursday, June 10, 2010

Flower Power

This week I have been taking lots of micro-gigapans, both focus stacked and not, of flowers found outside my office. When I was outside I noticed that there were at least 8-10 different varieties of small flowers among the grass. I was surprised by the diversity and wanted to capture it.

This gigapan was taken of a yellow flower that had been dried. The image is composed of 170 pictures taken through a table top optical microscope. The flower has been magnified ~40x. This image was not focus stacked and is 1.82 gigapixels.

View the full image at GigaPan.org

Here are two other flowers that I have imaged. The red flower is a mosaic of 24 images, each of which was focus stacked from 62 images at various focal points. This means that although the final image is only 0.16 gigapixels, we took a total of 1488 pictures.
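The picture count is just tiles times stack depth, which is worth having as a one-liner when planning a capture session. A minimal sketch; the function name is made up for illustration:

```python
def total_exposures(tiles, frames_per_stack=1):
    """Number of shutter releases for a mosaic of focus-stacked tiles."""
    return tiles * frames_per_stack

# Red flower: 24 tiles, 62 focal points per tile.
red_flower = total_exposures(24, 62)
print(red_flower)
```

Leaving `frames_per_stack` at 1 gives the count for a mosaic that is not focus stacked.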

The purple flower was just a quick focus stacked mosaic. It is composed of far fewer images than the red flower, and the flower was magnified ~40x.

View the full image at GigaPan.org

View the full image at GigaPan.org


Tuesday, June 8, 2010

"I have nothing to offer but blood, toil, tears and sweat"

This is a 35-image, non-focus-stacked gigapan of a drop of blood, magnified ~40x and taken through an optical microscope.

View the full image at GigaPan.org


Friday, June 4, 2010

Tails

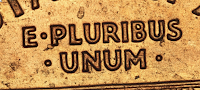

This is a micro-gigapan of a penny. It is composed of 367 images taken through an optical microscope. This image was not focus stacked, but you can explore it here:

http://gigapan.org/gigapans/50690/

The front side of the penny had been imaged previously and can be viewed here: http://gigapan.org/gigapans/41737/

A Flea

If you haven't already had the pleasure of seeing a flea, or just wanted to see one up close for once, and not crawling on your dog, this micro gigapan is for you.

This image is an initial test to see the utility of imaging flea samples using the incident light microscope. It was composed of 525 images, focus stacked with 21 images deep in a 5 x 5 array.

This flea, along with some others, was given to us by the Carnegie Mellon museum of natural history.

Tuesday, June 1, 2010

Focus stacked micro-gigapan

Here is the first focus stacked micro-gigapan. It is composed of an array of 16 images, each of which is composed of 20 images that are focus stacked. In all, 320 images were taken to make this image, which is only 0.11 gigapixels.

Here is another image to compare this focus stacked gigapan to. It is a 4x4 array stitched together, but instead of using the focus stacked images, we instead just used a single image. As you can see it is not nearly as in focus as the image above.
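For readers wondering what a stacker does with those 20 frames per tile, here is a toy illustration of the general idea: for each pixel, keep the value from whichever frame is locally sharpest. This is not the method used for the images here. The sharpness measure (an absolute 4-neighbour Laplacian), the wrap-around edge handling, and the function names are all assumptions made for the sketch.

```python
def sharpness(img, y, x):
    """Absolute 4-neighbour Laplacian at (y, x); edges wrap around."""
    h, w = len(img), len(img[0])
    neighbours = (img[(y - 1) % h][x] + img[(y + 1) % h][x]
                  + img[y][(x - 1) % w] + img[y][(x + 1) % w])
    return abs(neighbours - 4 * img[y][x])

def focus_stack(frames):
    """For each pixel, keep the value from the frame that is sharpest there."""
    h, w = len(frames[0]), len(frames[0][0])
    out = []
    for y in range(h):
        row = []
        for x in range(w):
            best = max(frames, key=lambda f: sharpness(f, y, x))
            row.append(best[y][x])
        out.append(row)
    return out

# Demo: an 8x8 checkerboard stands in for fine detail. Each frame has one half
# "in focus" (checkerboard) and the other half blurred to flat grey (0.5).
sharp = [[float((x + y) % 2) for x in range(8)] for y in range(8)]
left_in_focus = [[sharp[y][x] if x < 4 else 0.5 for x in range(8)] for y in range(8)]
right_in_focus = [[sharp[y][x] if x >= 4 else 0.5 for x in range(8)] for y in range(8)]
stacked = focus_stack([left_in_focus, right_in_focus])
print(stacked == sharp)
```

Plain lists keep the sketch dependency-free; real frames are large enough that an array library would be the practical choice, and real stackers also align frames and smooth the selection, which this skips.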

Friday, May 28, 2010

Maker Faire 2010

Small World Explorations had an exhibit at Maker Faire (May 22nd-23rd) in San Mateo, CA. We explained our project as well as gigapans to hundreds of people over the course of the weekend and were greeted with a lot of enthusiasm by the maker community.

Wednesday, May 12, 2010

Getting Ready to Print

Using the Gigapan gives you the freedom to select the aspect ratio, or shape, of your pictures. Since you specify the top left and bottom right of your images you can make them short and wide, tall and narrow, or anywhere in between.

This freedom is great until it collides with the physical world of standard size monitors and paper.

Large format printers get paper (or canvas or vinyl) on rolls, so the fixed factor is the width.

To calculate the length you need to print a Gigapan on a certain width of paper:

Length of paper = width of paper * gigapan width / gigapan height

Example:

I have a 91084 x 17246 Gigapan and I want to print it on 44" wide paper. So what is the length?

length = 44" * 91084 / 17246 = 232.4" = about 19.4 feet

232.4" x 44" = 10,225.6 square inches = 71 square feet

There are different rates, but I've been quoted $10 per square foot to print. That would be $710, so I probably won't be printing that GigaPan at that size until printing costs come down!
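The same calculation as a small script, in case you want to try other roll widths or quotes. This is a sketch: the function name is made up, the $10 default is just the quote mentioned above, and it assumes the gigapan's height is printed across the roll width.

```python
def print_size(width_px, height_px, roll_width_in, cost_per_sqft=10.0):
    """Return (length in inches, area in square feet, cost in dollars)."""
    length_in = roll_width_in * width_px / height_px
    area_sqft = roll_width_in * length_in / 144  # 144 square inches per square foot
    return length_in, area_sqft, area_sqft * cost_per_sqft

length_in, area_sqft, cost = print_size(91084, 17246, 44)
print(f"{length_in:.1f} in long, {area_sqft:.0f} sq ft, ${cost:.0f}")
```

For the example gigapan this reproduces the figures worked out by hand: roughly 232.4 inches, 71 square feet, and $710.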


Monday, May 3, 2010

More focus stacking fun.

Wednesday, April 28, 2010

First Results with Model 163 Microscope

Thanks to the nice people at the Maker Shed of Make Magazine we have an awesome new microscope to play with.

To prove the point that you don't need automation in order to capture a GigaPan style mosaic of images (assuming you have a good eye, and patience :-) I took the following gigapan of part of a 6 inch machinist's ruler. The numbers are tenths of an inch, and the hash marks are hundredths of an inch.

View the full image at GigaPan.org

This image was taken with the 4x objective, which normally gives 40x magnification and a 4.5mm field of view. The SLR camera adapter adds 2.5x of magnification, bringing the total to 100x, so each frame has a 1.8mm field of view.
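The adapter arithmetic generalizes: extra magnification multiplies the total and divides the field of view by the same factor. A minimal sketch with a made-up helper name, using only the numbers quoted above:

```python
def with_adapter(magnification, field_of_view_mm, adapter_factor):
    """Apply an extra magnifying stage: total goes up, field of view shrinks."""
    return magnification * adapter_factor, field_of_view_mm / adapter_factor

mag, fov_mm = with_adapter(40, 4.5, 2.5)
print(f"{mag:.0f}x, {fov_mm:.1f}mm per frame")
```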

All thanks to Dan Woods and Rob Bullington at Make Magazine for giving us a huge discount on a 'Compound Oil Immersion Microscope model 163'. It is like their model 162 but with a 3rd viewing port which comes straight up.

We also got an SLR Photo Adapter, with a 2.5x magnifying lens which ends in a T-Mount, and a T-Mount adapter for the Canon T2i camera.

The result is a comparatively inexpensive instrument which has a non-motorized XY stage and non-motorized focus knob. We are working to adapt the XY and Z axes to be controlled by stepper motors, with an Arduino running a slight variant of the G-Code CNC machine code from the MakerBot/RepRap code base (the variation is just ripping out some code and libraries which we don't need in order to make it compile a little easier).
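To give a feel for what driving the stage with G-Code might look like, here is a sketch that generates moves for a back-and-forth (serpentine) scan over a grid of frames. It is not the project's code. It assumes the firmware accepts the common `G21` (millimetres), `G90` (absolute positioning) and `G1 X.. Y.. F..` (linear move) commands; the 25% overlap between frames and the feed rate are made-up values, and triggering the camera between moves is left out.

```python
def serpentine_gcode(cols, rows, step_mm, feed_mm_per_min=100):
    """Yield G-code moves visiting a cols x rows grid in a back-and-forth path."""
    yield "G21"  # millimetre units
    yield "G90"  # absolute positioning
    for r in range(rows):
        # Reverse direction on every other row to avoid long return moves.
        xs = range(cols) if r % 2 == 0 else reversed(range(cols))
        for c in xs:
            yield f"G1 X{c * step_mm:.3f} Y{r * step_mm:.3f} F{feed_mm_per_min}"

# 1.8mm field of view per frame, stepping 75% of a frame (hypothetical overlap).
step = 1.8 * 0.75
moves = list(serpentine_gcode(3, 2, step))
print("\n".join(moves))
```

The overlap matters because the stitching software needs shared detail between neighbouring frames to line them up.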


Monday, April 19, 2010

micro focus stacking tests

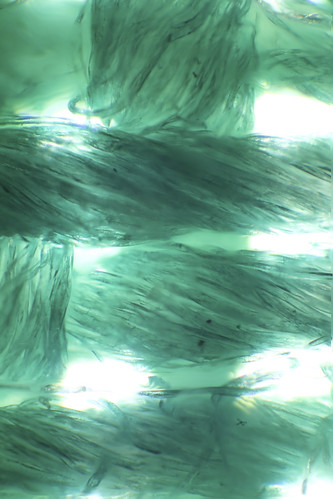

This weekend I managed to start playing around with focus stacking, using Zerene Stacker. I seem to be getting mixed results from the final output images. There are, however, quite a few variables to play with: depth of field (through aperture size on both the camera and the microscope), the number of images in a stack, light conditions, etc. The images above show the preliminary results. They were all imaged using the same aperture settings, about mid setting on the microscope and f/6.3 on the camera. Each set of images contains the final stacked image on the right and two of the many (40-50) images used to create the final stack, giving some sense of the obstacle posed by a reduced depth of field. These images were taken at roughly 200x, 1000x, and 2000x magnification. It's neat to compare these images to those taken with the SEM in the gigapan below:

Thursday, April 15, 2010

imaging the imager

The subject of this micro-gigapan is the optical sensor of a Canon PowerShot G1. The chip is about 8mm long and 6mm wide. While the entire length of the chip was captured, only a small portion of the width was imaged due to the highly repetitive pattern of the light sensors.

The focus stacking feature has yet to be implemented on this microscope. As such, the chip had to be very level with the imaging plane. The chip was leveled using a two degree of freedom rotation stage.

Friday, March 19, 2010

Recent Press - Boing Boing and CNet

We are always happy to get press!

We were boing boinged last week - Thanks Mark Frauenfelder!

And today James Martin of CNet gave us a great writeup with lots of embedded images!

Thanks James!


Enhance: The unofficial theme of the GigaPan and NanoGigapan Projects

This could be the unofficial theme video for the GigaPan project...a series of video clips from movies and TV with the command Enhance! followed by video enhancement.

GigaPans and NanoGigapans let us zoom in, which is a lot like 'Enhance!'

At the last ETech in 2009 Julian Bleecker did a great talk on Design Fictions. Brutally summarized, the idea is that the best way to present a new technology (real, or imagined!) is to assume that the technology exists, and to insert it into a work of fiction.

As an audience we are trained to the suspension of disbelief in watching stories, and so we accept the technology, and we experience the characters as they interact with the technology to advance the story.

The quintessential example is the motion controlled displays in _Minority Report_. According to Julian (or to my memory of Julian's talk :-), a technologist worked with the actors to develop a coherent gestural language: something which felt like it made sense to the actor, and to the character whom the actor was attempting to inhabit.

The visuals were all faked. The system didn't have to react to the actual gestures, that is what special effects are for! But the experience of the actor in creating this language, and the experience of the audience in experiencing that interface as an integral aspect of a compelling narrative were real.

And this process of creating a technology first in fiction works. It works much better as a method of conveying the possibilities of new technologies than doing a demo at a trade show or conference.

And in many ways it is also harder for a technologist to create this sort of Design Fiction than to just write some code or to create a product. Deciding that we should have a device which, for example, tracks where we are and displays that in 'useful' ways on a map, along with annotations about our mood, and biometric data, and ambient data, is easy.

And designing such a device is relatively easy.

But designing it to actually be something which even alpha geeks, let alone average people, can use is very very hard.

Christian Nold created Biomapping at about the same time as Schuyler Erle and I were working on related ideas. Hell, it is even possible that we predated him :-) But getting any device to function is hard. And even now, to the best of my knowledge, Christian has created beautiful maps and proofs of concept, but has not yet penetrated into even the geek community.

Julian wrote about Design Fictions a year ago. It is well worth reviewing.


Friday, March 12, 2010

Big plans for small things

Randy Sargent, Gene Cooper, Rich Gibson and I (Jay Longson) are working towards developing a suite of tools to aid in making these macro/micro/nano gigapans. We're hoping to very soon put together a standard recipe to enable people to adapt their own microscopes to take similar imagery. Our goal is for this to become a DIY project with differing levels of complexity based on the maker's knowledge and skills. On one end of the spectrum will be a kit with all the necessary parts to create a gigapan enabled microscope; on the other end will be makers using some of the tools we develop here to modify their own microscopes. We hope to publish the "how to" through Make magazine. We aim to get these tools into the hands of as many people as possible, research scientists and kids alike. Stay tuned for more information.

Thursday, March 11, 2010

Giant Penny!

Here is a slightly lower magnification (~200x) micro-gigapan of the US penny. It was composed from 320 images, making a total image size of 600 megapixels. It's neat to ponder which marks on Lincoln's face are artifacts of the penny making process and which were intentionally etched.

"Will all great Neptune's ocean wash this blood clean from my hand?"

This micro-gigapan was shot at a magnification of nearly 2000x. The image is of a blood smear on a piece of single crystal silicon. While the specimen was relatively flat, there is still enough variation in height to make some regions out of focus due to the severely limited depth of field at this magnification. Clearly some focus stacking is in order.

Micro-gigapan setup

We've made this little video to show off the micro-gigapan in action.

This setup consists of:

• Computer running some code written in Processing to control the xyz stage and the camera.

• Prior ProScan xyz stage to control the specimen position relative to the microscope.

• Leica DMLM light microscope.

• Canon PowerShot S5 IS being triggered using the Canon Hack Development Kit (CHDK).

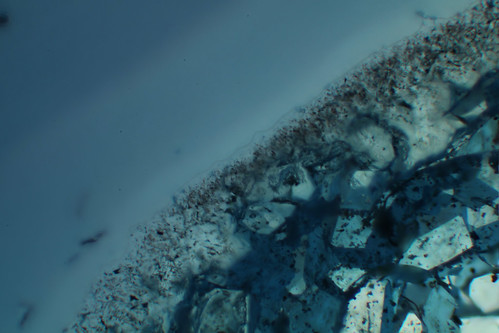

First micro-gigapan; bee wing on tape and silicon

Finally we've made the push to get our optical microscope working as a micro-gigapan! Here is our first attempt. There are obviously some problems with the vignetting due to the microscope-camera adapter, though this should be solvable.
